The goal of this project was to create an AI-powered journal for people with visual impairments.

At-a-glance

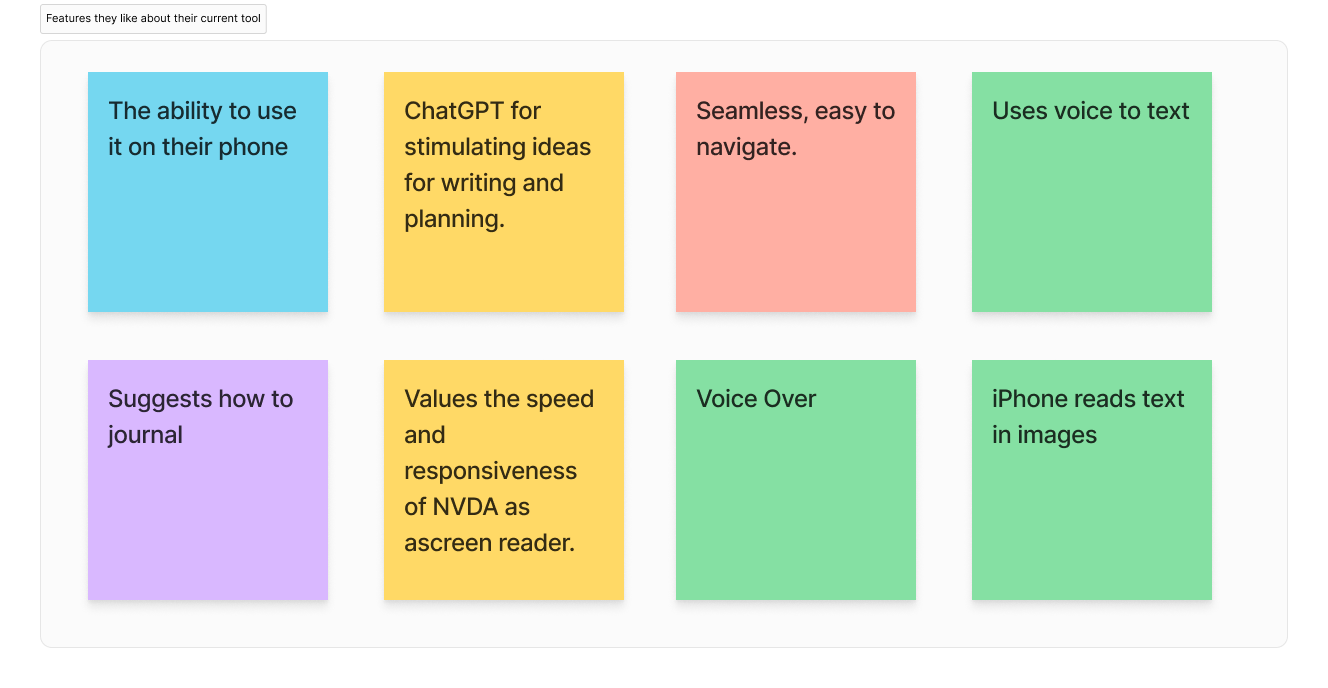

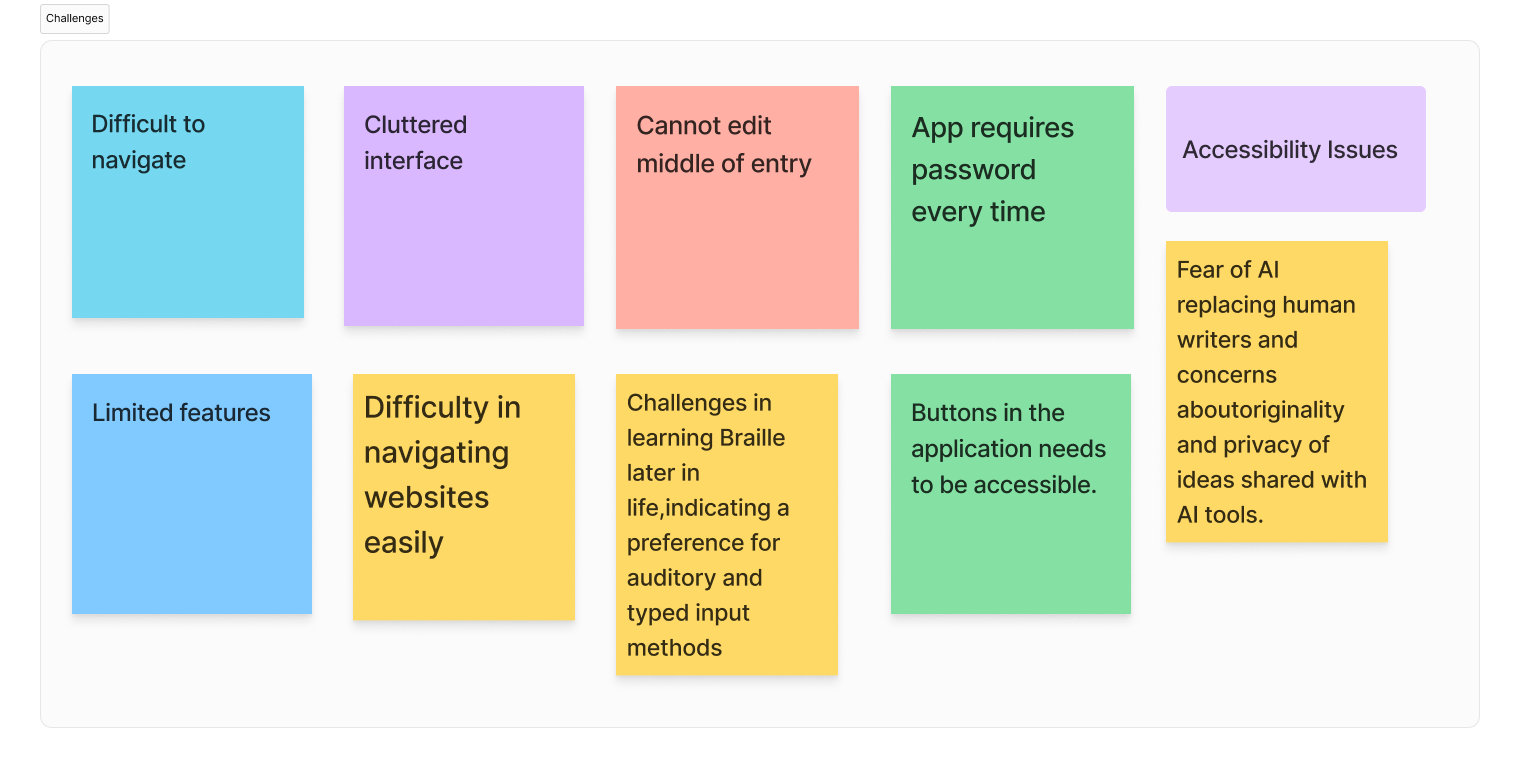

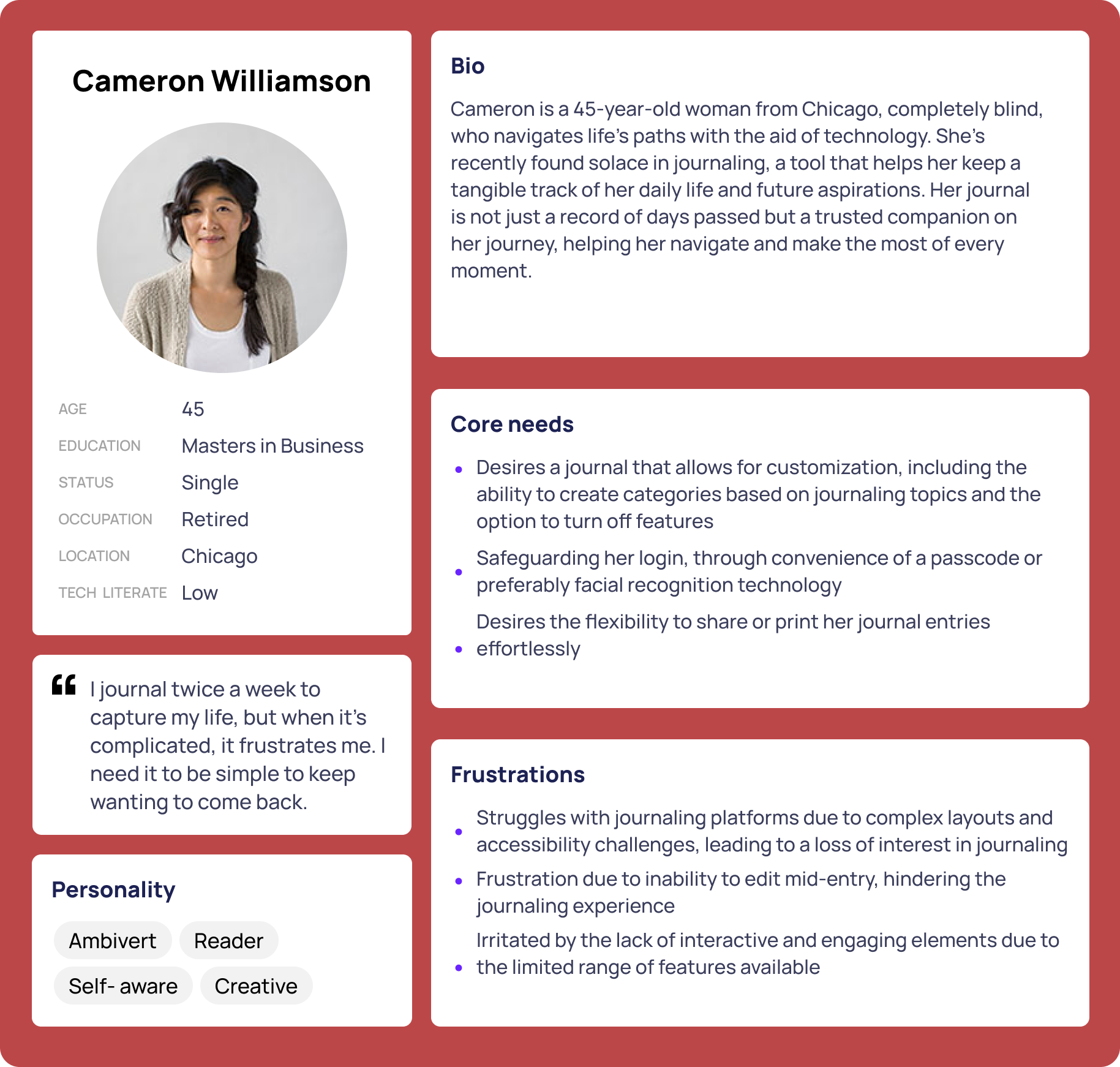

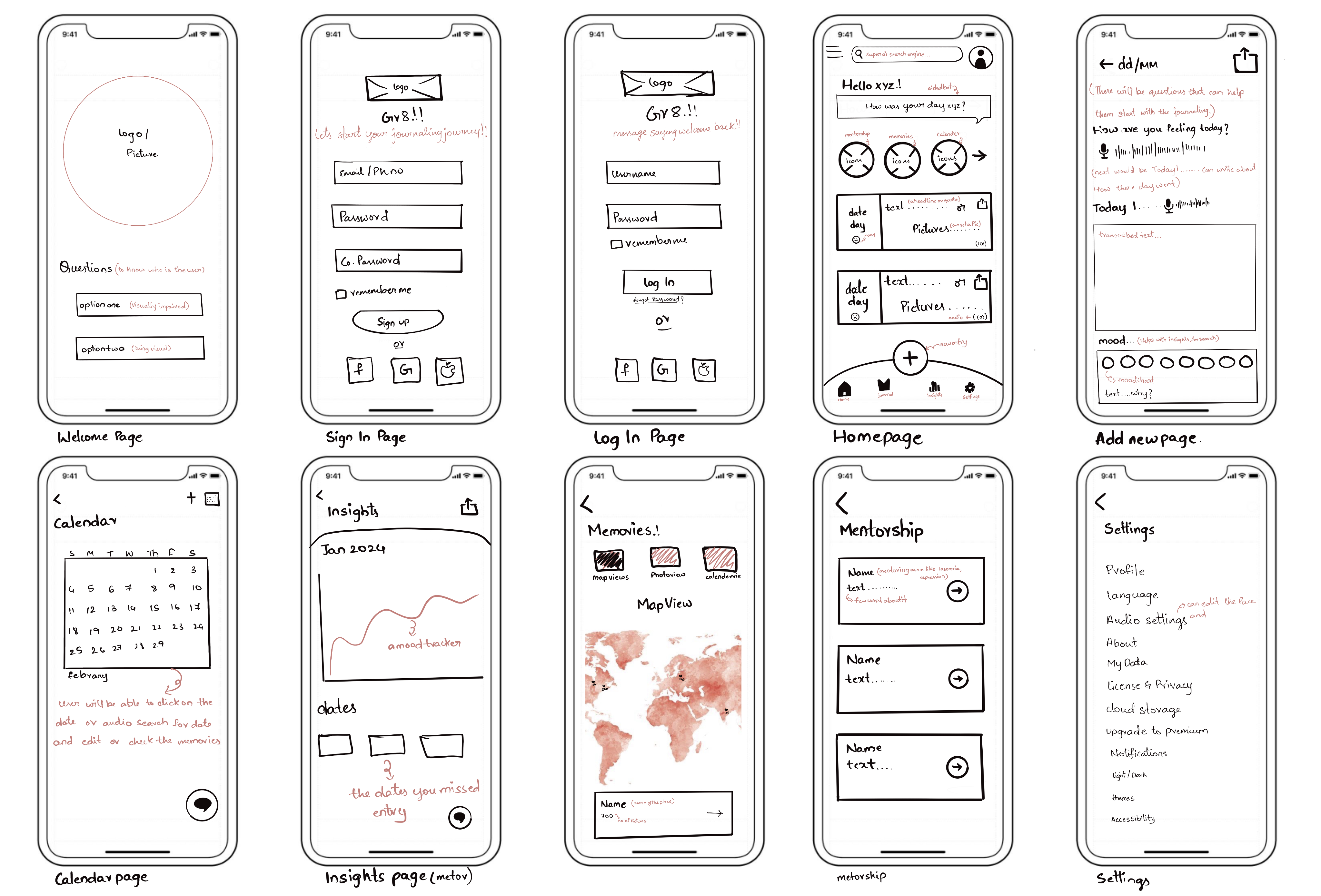

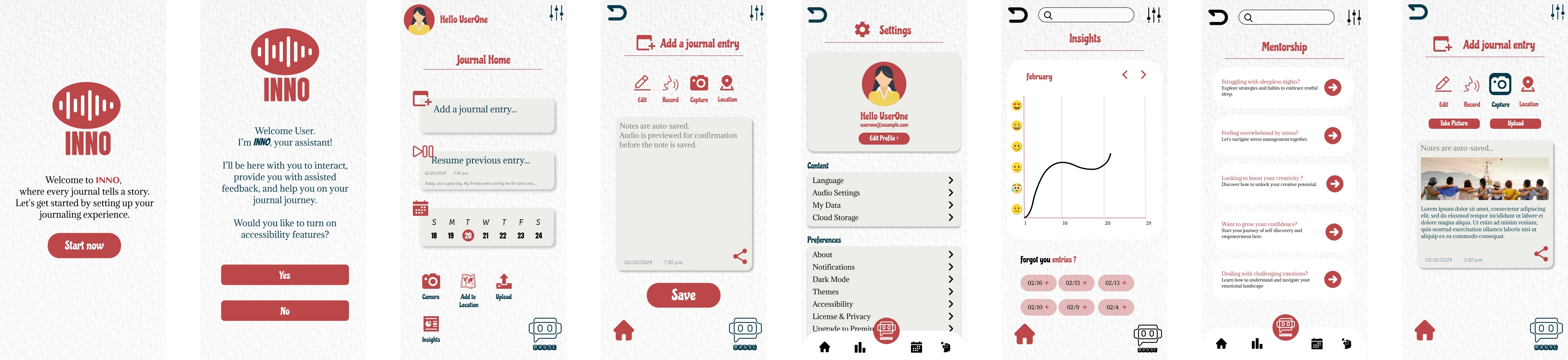

People with visual impairments (VI) have a strong desire to journal, but current tools lack the accessibility features that would make journaling easier and more productive. Most VI users journal in built-in apps such as Notes, because third-party apps can be tedious to use.

Creating this AI-powered journal began with reviewing current research and conducting a competitor analysis. We followed this with research interviews with VI individuals and surveys with sighted users. Once we understood our user groups and the features they needed, we began creating wireframes, and in parallel we ran card sorts to establish a strong information architecture. Finally, once the prototypes were ready, we conducted usability tests.

We found very few products that focus on the needs of visually impaired users. This is likely because usability testing with VI participants is difficult, especially when prototypes are built in typical design software such as Figma.

Focus areas

- Literature review, competitor analysis, user interviews

- Information architecture, wireframing, prototyping, content creation, usability testing

01 LITERATURE REVIEW

Our initial focus was to create an AI journal with better features and performance than existing products. During the competitor analysis, however, we discovered that none of the products we reviewed included accessibility features. This led us to reorient the product around the journaling needs of visually impaired users. Because accessibility features are typically layered on top of a base product, we also treated sighted users as a secondary audience.

Although we found few research papers on technology designed specifically for VI users, several papers helped us understand the requirements and guidelines for optimal accessibility for visually impaired users. We also learned about conducting research with VI users, gaps in the market, and the need for more inclusive mobile applications. Additionally, some papers concluded that machine learning for human support could be highly beneficial, provided that ethical concerns and the use of bots in customer service are addressed.

02 COMPETITOR ANALYSIS

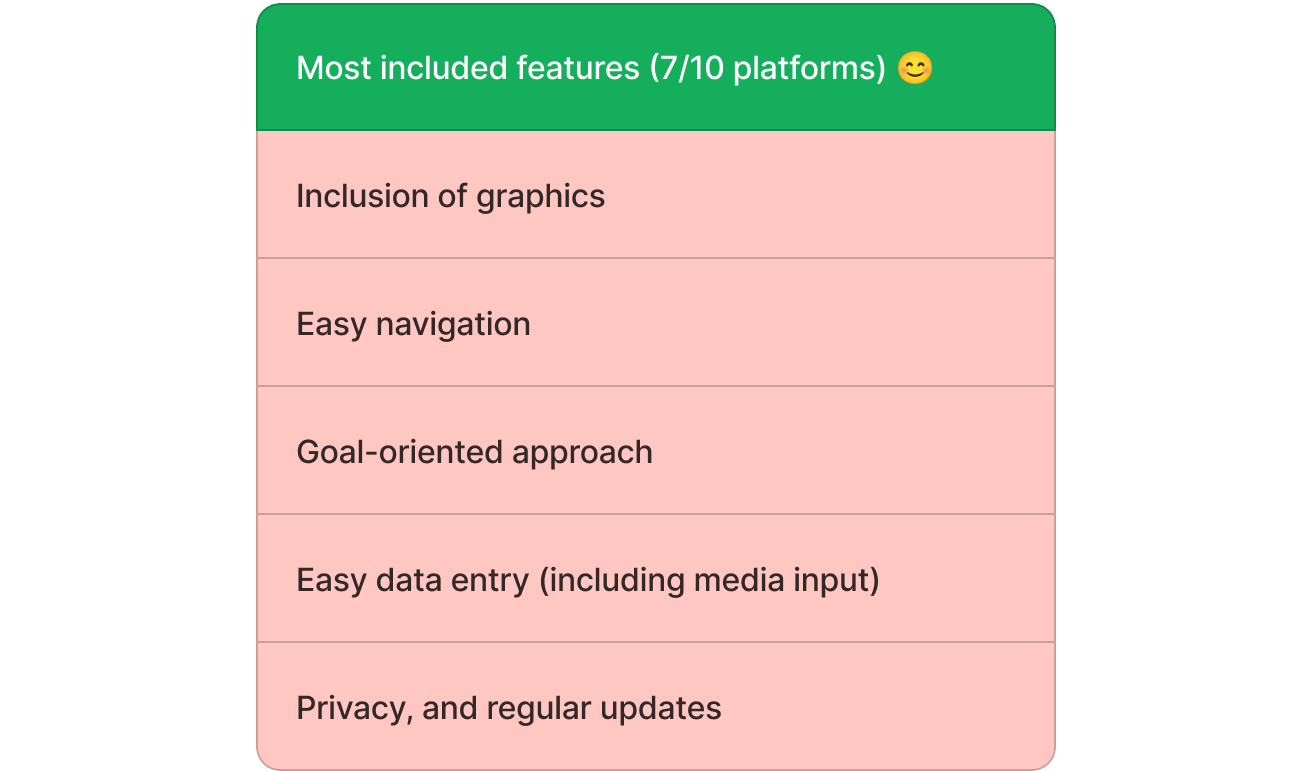

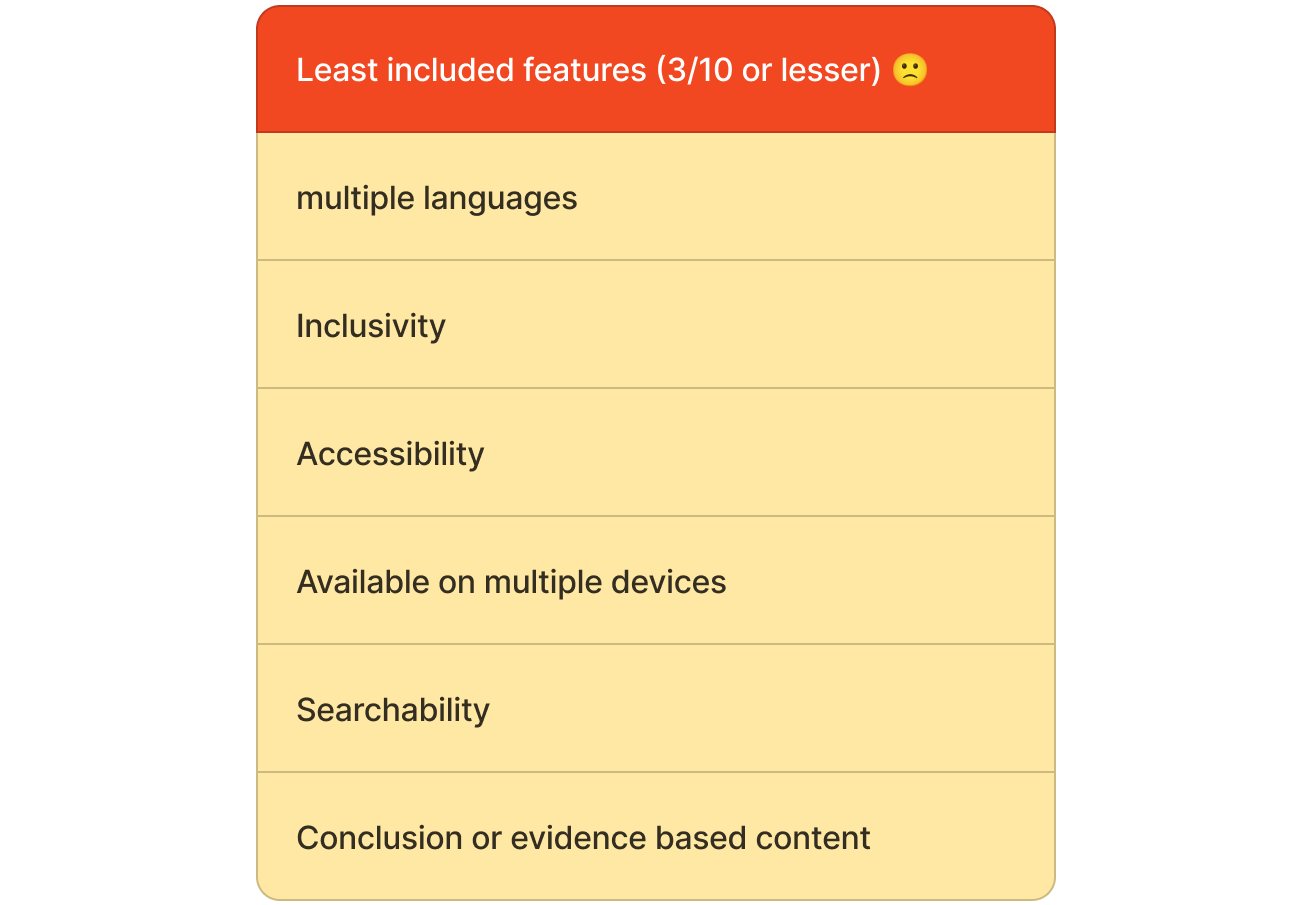

- We adapted the evaluation framework from Sharma et al. (2022), "Evaluation of mHealth Apps for Diverse, Low-Income Patient Populations: Framework Development and Application Study," to suit the goals of our competitor analysis.

- We assessed 10 mobile apps and websites.